Imagine being able to find a specific book in a vast library in a matter of seconds. This is made possible by binary search algorithms, a fundamental concept in computer science that relies on the mathematical foundations of algorithms.

Understanding the math behind algorithms is crucial for developing efficient software applications. Sorting algorithms are another critical part of computer science, because they allow data to be organized and retrieved quickly.

By grasping these concepts, developers can create more efficient and scalable software systems.

Key Takeaways

- Binary search algorithms enable fast data retrieval.

- Understanding the math behind algorithms is crucial for efficient software development.

- Understanding sorting algorithms is essential for data organization.

- Efficient algorithms are critical for scalable software systems.

- Mathematical foundations of algorithms are vital for computer science.

The Fundamental Building Blocks of Algorithms

To comprehend complex algorithms, one must first understand their basic building blocks. Algorithms are essentially a set of instructions designed to perform a specific task, and their effectiveness is rooted in their fundamental structure.

What Makes an Algorithm?

An algorithm is a well-defined procedure that takes some input and produces a corresponding output. It’s not just about writing code; it’s about understanding the mathematical principles that drive the algorithm’s efficiency and accuracy. Algorithm complexity analysis is crucial in determining how scalable an algorithm is.

The Language of Algorithms: Pseudocode and Notation

Pseudocode and notation are the languages used to describe algorithms. Pseudocode is a simplified, human-readable representation of an algorithm that omits the details of the programming language syntax. It’s used to convey the logic of the algorithm without getting bogged down in implementation specifics.

Let’s consider a simple example of finding the maximum number in a list:

- Initialize max to the first element of the list.

- Compare each subsequent element to max.

- If an element is greater than max, update max.

- After iterating through the entire list, max will hold the maximum value.

This process can be represented in pseudocode, making it easier to understand and analyze the algorithm’s complexity.

| Operation | Description | Complexity |
|---|---|---|
| Initialization | Set max to the first element. | O(1) |
| Comparison | Compare each element to max. | O(n) |
| Update | Update max if a larger element is found. | O(n) |
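The find-the-maximum steps can be sketched in Python as follows. The function name `find_max` and the choice to raise an error on an empty list are illustrative assumptions, not part of the description above.

```python
from typing import Sequence


def find_max(values: Sequence[float]) -> float:
    """Return the largest element using a single left-to-right scan."""
    if not values:
        # Assumption: an empty input is treated as an error.
        raise ValueError("find_max() requires a non-empty sequence")
    current_max = values[0]              # Initialization: O(1)
    for i in range(1, len(values)):      # n - 1 comparisons in total: O(n)
        if values[i] > current_max:
            current_max = values[i]      # Update: at most n - 1 times
    return current_max
```

For example, `find_max([3, 9, 2])` returns `9` after two comparisons and one update.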

Understanding these fundamental elements is crucial for analyzing and optimizing algorithms, a key aspect of mathematics in computer science.

The Math Behind Algorithms: From Binary Search to Sorting Algorithms

Understanding the math behind algorithms is essential for appreciating their complexity and efficiency. Algorithms, whether for binary search or sorting, rely on mathematical foundations to operate effectively.

Mathematical Foundations of Algorithm Design

The design of algorithms is deeply rooted in mathematical concepts. Discrete mathematics, including combinatorics and graph theory, plays a crucial role in algorithm development. These mathematical disciplines provide the tools necessary for analyzing and optimizing algorithm performance.

How Mathematics Drives Algorithmic Efficiency

Mathematics drives algorithmic efficiency by providing methods to analyze and predict performance. One key mathematical concept used in this analysis is recurrence relations.

Recurrence Relations and Algorithm Analysis

Recurrence relations are equations that define a sequence of values recursively. They are particularly useful in analyzing the time complexity of algorithms, especially those that use divide-and-conquer strategies like binary search and merge sort. By solving recurrence relations, developers can predict the performance of algorithms on large datasets, enabling them to optimize their code effectively.

For instance, the time complexity of binary search can be expressed using a recurrence relation, which simplifies to O(log n), indicating that binary search is very efficient even for large datasets.
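As a sketch of how that simplification goes, assume n is a power of two and each halving step costs a constant c (both are simplifying assumptions made here for illustration). Unrolling the recurrence gives:

$$
\begin{aligned}
T(n) &= T(n/2) + c, \qquad T(1) = c \\
     &= T(n/4) + 2c \\
     &= T(n/2^k) + kc \\
     &= T(1) + c\log_2 n \qquad (\text{taking } k = \log_2 n) \\
     &= c\,(\log_2 n + 1) = O(\log n)
\end{aligned}
$$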

Understanding Algorithm Complexity

Understanding the complexity of algorithms is vital for assessing their performance and scalability. Algorithm complexity refers to the amount of resources, such as time or space, required to execute an algorithm.

Big O Notation Explained

Big O notation is a mathematical notation that describes an upper bound on how an algorithm’s resource use grows with the input size. It is most often quoted for the worst case, although the same notation can also bound best-case or average-case behavior. For instance, binary search runs in O(log n) time in the worst case, which makes it highly efficient.

Time vs. Space Complexity

Time complexity refers to the amount of time an algorithm takes to complete, while space complexity refers to the amount of memory it requires. There’s often a trade-off between the two. For example, some algorithms may use more memory to achieve faster execution times.

The Importance of Asymptotic Analysis

Asymptotic analysis is crucial for understanding how an algorithm’s complexity changes as the input size increases. It helps in predicting the algorithm’s performance on large datasets.

Growth Rate Comparisons

Different algorithms have different growth rates. For instance, binary search has a logarithmic growth rate, making it much more efficient than linear search for large datasets.

| Algorithm | Time Complexity | Space Complexity |
|---|---|---|
| Binary Search | O(log n) | O(1) |
| Linear Search | O(n) | O(1) |

Binary Search: Divide and Conquer in Action

The binary search algorithm is a quintessential example of the divide and conquer strategy in action. It’s a method used to efficiently locate a target value within a sorted array by repeatedly dividing the search interval in half.

The Mathematical Principle Behind Binary Search

Binary search relies on the mathematical principle that a sorted array can be divided into smaller subarrays, allowing for efficient searching. This principle is rooted in the concept of ordered sets, where elements are arranged in a specific order, enabling the algorithm to make informed decisions about where to search next.

Step-by-Step Binary Search Algorithm

The binary search algorithm works as follows:

- Start with a sorted array and a target value to search for.

- Compare the target value to the middle element of the array.

- If the target value is less than the middle element, repeat the process with the left half of the array.

- If the target value is greater, repeat with the right half.

- Continue until the target value is found or the subarray is empty.
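The steps above can be sketched as an iterative Python function. The name `binary_search` and the convention of returning `-1` for a missing target are assumptions made for this example.

```python
from typing import Sequence


def binary_search(items: Sequence[int], target: int) -> int:
    """Return an index of target in the sorted sequence items, or -1 if absent."""
    low, high = 0, len(items) - 1
    while low <= high:                 # the current subarray is items[low..high]
        mid = (low + high) // 2
        if items[mid] == target:
            return mid                 # found
        if target < items[mid]:
            high = mid - 1             # continue in the left half
        else:
            low = mid + 1              # continue in the right half
    return -1                          # subarray is empty: target is absent
```

Using two indices instead of slicing the list keeps the extra memory constant, which matches the O(1) space usually quoted for binary search.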

Calculating Binary Search Efficiency

The efficiency of binary search can be calculated by analyzing the number of comparisons required to find the target value. This is typically expressed using Big O notation, which provides an upper bound on the number of operations.

Mathematical Proof of O(log n) Complexity

The time complexity of binary search is O(log n), where n is the number of elements in the array. To see why, note that each comparison against the middle element halves the search space, so after k comparisons at most n/2^k elements remain. The search ends once fewer than one element remains, which happens after at most ⌊log₂ n⌋ + 1 comparisons. The number of comparisons therefore grows only logarithmically with n, which demonstrates the efficiency of binary search.
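The bound can also be checked empirically. The sketch below counts how many times a standard iterative binary search probes a middle element, trying every present value and every gap between values. The helper name `max_probes` is hypothetical, and the array of consecutive integers is just a convenient test input.

```python
import math


def max_probes(n: int) -> int:
    """Largest number of middle-element probes binary search makes on n items."""
    items = list(range(n))
    worst = 0
    # Targets -0.5, 0, 0.5, 1, ... cover every hit and every possible miss.
    for target in (x / 2 for x in range(-1, 2 * n)):
        low, high, probes = 0, n - 1, 0
        while low <= high:
            probes += 1
            mid = (low + high) // 2
            if items[mid] == target:
                break
            if target < items[mid]:
                high = mid - 1
            else:
                low = mid + 1
        worst = max(worst, probes)
    return worst


# The worst case matches floor(log2(n)) + 1 for every size tried here.
for n in (1, 2, 7, 8, 100):
    assert max_probes(n) == math.floor(math.log2(n)) + 1
```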

The Logarithmic Nature of Binary Search

Binary search stands out for its efficiency, rooted in its logarithmic time complexity. This characteristic makes it significantly faster than linear search, especially for large datasets.

Why Binary Search is O(log n)

The binary search algorithm achieves its O(log n) complexity by dividing the search space in half with each iteration. Because the logarithm is the inverse of exponentiation, a search space that shrinks exponentially with the number of iterations is exhausted after a number of iterations that grows only logarithmically with n: halving n repeatedly reaches a single element after about log₂ n steps.

Comparing Linear vs. Logarithmic Growth

To understand the efficiency of binary search, it’s crucial to compare linear and logarithmic growth. Linear growth increases directly with the size of the input, whereas logarithmic growth increases much more slowly. This difference becomes particularly significant as the dataset size grows.
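To make the difference concrete, the following sketch tabulates worst-case step counts for a few input sizes. It assumes linear search inspects all n elements in the worst case and binary search needs ⌊log₂ n⌋ + 1 probes; the function name `worst_case_steps` is illustrative.

```python
import math


def worst_case_steps(n: int) -> tuple[int, int]:
    """Return (linear search steps, binary search steps) in the worst case."""
    return n, math.floor(math.log2(n)) + 1


for n in (10, 1_000, 1_000_000, 1_000_000_000):
    linear, binary = worst_case_steps(n)
    print(f"n={n:>13,}  linear={linear:>13,}  binary={binary}")
```

Growing the input from a thousand to a billion elements multiplies the linear-search cost by a million, while the binary-search cost only rises from 10 to 30 probes.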

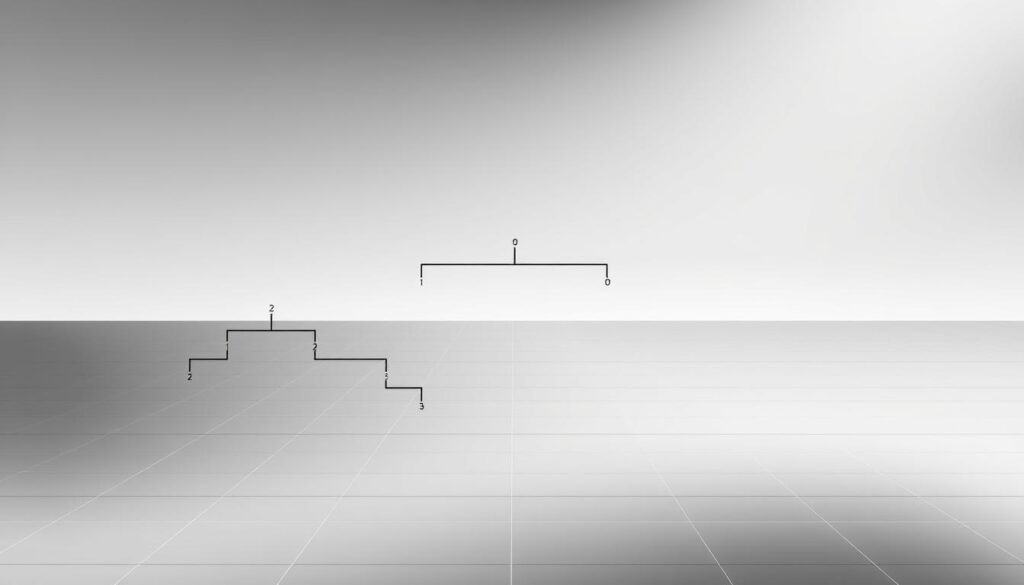

Visualizing Logarithmic Efficiency

Visualizing the difference between linear and logarithmic growth can help illustrate why binary search is so efficient.  A graphical representation typically shows a linear search having a straight line with a positive slope, while binary search is represented by a curve that flattens out, indicating its superior efficiency for large datasets.

In conclusion, the logarithmic nature of binary search makes it an indispensable algorithm in computer science, particularly for applications involving large datasets. Its efficiency is a direct result of its mathematical foundations.

Elementary Sorting Algorithms and Their Mathematical Analysis

The world of sorting algorithms is vast, but it begins with understanding the elementary algorithms that form the backbone of data organization. These fundamental algorithms, while not the most efficient for large datasets, provide a crucial foundation for understanding more complex sorting techniques.

Bubble Sort: The Mathematical Simplicity

Bubble Sort is one of the simplest sorting algorithms, working by repeatedly swapping the adjacent elements if they are in the wrong order. This process continues until the list is sorted. The simplicity of Bubble Sort makes it a great teaching tool, but its inefficiency for large lists is notable. Mathematically, Bubble Sort has a worst-case and average time complexity of O(n^2), where n is the number of items being sorted.
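A minimal Python sketch of Bubble Sort follows. The early-exit flag `swapped` is a common optimization rather than something the description above requires, and returning a sorted copy instead of sorting in place is a choice made for this example.

```python
def bubble_sort(items: list) -> list:
    """Return a sorted copy of items using Bubble Sort."""
    result = list(items)
    for end in range(len(result) - 1, 0, -1):
        swapped = False
        for i in range(end):
            if result[i] > result[i + 1]:
                # Adjacent pair is out of order: swap it.
                result[i], result[i + 1] = result[i + 1], result[i]
                swapped = True
        if not swapped:
            break  # a full pass with no swaps means the list is sorted
    return result
```

The nested loops perform up to n(n - 1)/2 comparisons, which is where the O(n^2) bound comes from.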

Selection Sort: Finding the Minimum

Selection Sort improves upon Bubble Sort by reducing the number of swaps. It works by dividing the list into two parts: the sorted part at the left end and the unsorted part at the right end. Initially, the sorted part is empty, and the unsorted part is the entire list. The smallest element is selected from the unsorted array and swapped with the leftmost element, and that element becomes a part of the sorted array. This process continues until the list is fully sorted. Like Bubble Sort, Selection Sort has a time complexity of O(n^2).
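The same idea as a short Python sketch. The variable name `boundary` marks where the sorted part ends and is purely illustrative; the function returns a sorted copy rather than sorting in place.

```python
def selection_sort(items: list) -> list:
    """Return a sorted copy of items using Selection Sort."""
    result = list(items)
    n = len(result)
    for boundary in range(n - 1):
        # Find the smallest element in the unsorted part result[boundary:].
        smallest = boundary
        for i in range(boundary + 1, n):
            if result[i] < result[smallest]:
                smallest = i
        # At most one swap per pass, so at most n - 1 swaps overall.
        if smallest != boundary:
            result[boundary], result[smallest] = result[smallest], result[boundary]
    return result
```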

Insertion Sort: Building the Sorted Array

Insertion Sort is another simple sorting algorithm that builds the final sorted array one item at a time. It’s much like sorting a hand of cards. The array is virtually split into a sorted and an unsorted region. Each subsequent element from the unsorted region is inserted into the sorted region in its correct position. Insertion Sort also has an average and worst-case time complexity of O(n^2), making it less efficient for large lists.
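A minimal sketch of Insertion Sort in Python, written to return a sorted copy (an assumption made for this example; the algorithm is often implemented in place):

```python
def insertion_sort(items: list) -> list:
    """Return a sorted copy of items using Insertion Sort."""
    result = list(items)
    for i in range(1, len(result)):
        current = result[i]            # next element from the unsorted region
        j = i - 1
        # Shift larger elements of the sorted region one slot to the right.
        while j >= 0 and result[j] > current:
            result[j + 1] = result[j]
            j -= 1
        result[j + 1] = current        # insert into its correct position
    return result
```

On an already sorted input the inner `while` loop never runs, so the whole sort takes a single linear pass.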

Comparing Quadratic Time Complexities

All three algorithms have quadratic average-case and worst-case time complexity, which makes them poorly suited to sorting large datasets. Their behavior does differ by input, however. Insertion Sort performs well on nearly sorted lists, and Bubble Sort with an early-exit check finishes an already sorted list in a single pass, whereas Selection Sort always scans the entire unsorted part and gains nothing from existing order.

| Algorithm | Best-Case Time Complexity | Average Time Complexity | Worst-Case Time Complexity |
|---|---|---|---|
| Bubble Sort | O(n) | O(n^2) | O(n^2) |
| Selection Sort | O(n^2) | O(n^2) | O(n^2) |
| Insertion Sort | O(n) | O(n^2) | O(n^2) |

Understanding these elementary sorting algorithms is crucial for grasping more advanced sorting techniques. While they may not be the most efficient for large datasets, their simplicity and specific use cases make them valuable tools in a programmer’s toolkit.

Divide and Conquer Sorting Algorithms

The divide and conquer approach is a cornerstone of algorithmic design, enabling the creation of efficient sorting algorithms like Merge Sort and Quick Sort. This method involves breaking down a problem into smaller sub-problems, solving each sub-problem, and then combining the solutions to solve the original problem.

Merge Sort: The Mathematics of Merging

Merge Sort is a classic example of a divide and conquer algorithm. It works by recursively dividing the input array into two halves until each half contains one element, and then merging these halves in a sorted manner. The merging process is key to Merge Sort’s efficiency.

The recurrence relation for Merge Sort is given by T(n) = 2T(n/2) + O(n), where T(n) represents the time complexity of sorting an array of n elements. The term 2T(n/2) accounts for the recursive division of the array into two halves, and O(n) represents the time taken to merge these halves.
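The two parts of the recurrence map directly onto code. In the sketch below, the two recursive calls correspond to 2T(n/2) and the `merge` helper to the O(n) term. Both function names are illustrative, and this version returns a new list rather than sorting in place.

```python
def merge(left: list, right: list) -> list:
    """Merge two sorted lists into one sorted list in O(len(left) + len(right))."""
    merged = []
    i = j = 0
    while i < len(left) and j < len(right):
        if left[i] <= right[j]:        # <= keeps equal elements in input order
            merged.append(left[i])
            i += 1
        else:
            merged.append(right[j])
            j += 1
    merged.extend(left[i:])            # at most one of these is non-empty
    merged.extend(right[j:])
    return merged


def merge_sort(items: list) -> list:
    """Return a sorted copy of items using Merge Sort."""
    if len(items) <= 1:
        return list(items)             # base case: nothing to sort
    mid = len(items) // 2
    return merge(merge_sort(items[:mid]), merge_sort(items[mid:]))
```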

Solving T(n) = 2T(n/2) + O(n)

To solve this recurrence relation, we can use the Master Theorem, which provides a straightforward way to determine the time complexity of divide and conquer algorithms. For Merge Sort, the solution to the recurrence relation yields a time complexity of O(n log n), making it efficient for sorting large datasets.
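The same result can be reached by unrolling the recurrence directly. As a sketch, assume n is a power of two and write the merge cost as cn for some constant c (both assumptions are made here for illustration):

$$
\begin{aligned}
T(n) &= 2\,T(n/2) + cn \\
     &= 4\,T(n/4) + 2cn \\
     &= 2^k\,T(n/2^k) + k\,cn \\
     &= n\,T(1) + cn\log_2 n \qquad (\text{taking } k = \log_2 n) \\
     &= \Theta(n \log n)
\end{aligned}
$$

In Master Theorem terms, a = 2 and b = 2 give n^{\log_b a} = n, which matches the merge cost f(n) = \Theta(n), so the balanced case applies and contributes the extra logarithmic factor.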

Quick Sort: Partitioning and Pivoting

Quick Sort is another divide and conquer algorithm that sorts an array by selecting a ‘pivot’ element and partitioning the other elements into two sub-arrays, according to whether they are less than or greater than the pivot. The sub-arrays are then recursively sorted.

- Quick Sort’s average-case time complexity is O(n log n).

- However, in the worst-case scenario, its time complexity can degrade to O(n^2).
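A compact sketch of the idea in Python follows. Two choices here are assumptions rather than part of the description above: the pivot is picked at random, which makes the O(n^2) case unlikely for any fixed input, and the partitions are built as new lists, which is simpler to read than an in-place partition but uses O(n) extra memory.

```python
import random


def quick_sort(items: list) -> list:
    """Return a sorted copy of items using Quick Sort with a random pivot."""
    if len(items) <= 1:
        return list(items)
    pivot = random.choice(items)
    # Partition into three groups relative to the pivot.
    less = [x for x in items if x < pivot]
    equal = [x for x in items if x == pivot]
    greater = [x for x in items if x > pivot]
    return quick_sort(less) + equal + quick_sort(greater)
```

The worst case arises when the pivot is repeatedly the smallest or largest element, so one partition holds almost everything and the recursion depth grows to n.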

Understanding the mathematical principles behind these algorithms is crucial for optimizing their performance in various applications.

Advanced Sorting Techniques and Their Mathematical Foundations

Advanced sorting techniques have revolutionized data processing by providing mathematically sound methods for organizing large datasets. These algorithms are crucial in various applications, from database management to scientific computing.

Heap Sort and Binary Heaps

Heap Sort is an efficient sorting algorithm that utilizes a binary heap data structure. The algorithm works by treating the elements of the array as a complete binary tree. The heap property ensures that each parent node is greater than or equal to its child nodes (in a max heap) or less than or equal to them (in a min heap). This property is crucial for the sorting process, as it allows the maximum or minimum element to be extracted efficiently. The time complexity of Heap Sort is O(n log n), making it suitable for large datasets.
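The sketch below shows one way to implement this with a max heap stored in a plain list, where the children of index i sit at 2i + 1 and 2i + 2. The helper name `sift_down` is illustrative, and the function sorts a copy of its input.

```python
def sift_down(heap: list, root: int, end: int) -> None:
    """Restore the max-heap property below root, considering only heap[:end]."""
    while True:
        child = 2 * root + 1                       # left child
        if child >= end:
            return                                 # root is a leaf
        if child + 1 < end and heap[child + 1] > heap[child]:
            child += 1                             # right child is larger
        if heap[root] >= heap[child]:
            return                                 # heap property holds
        heap[root], heap[child] = heap[child], heap[root]
        root = child


def heap_sort(items: list) -> list:
    """Return a sorted copy of items using Heap Sort."""
    result = list(items)
    n = len(result)
    # Build a max heap by sifting down every non-leaf node, bottom up.
    for root in range(n // 2 - 1, -1, -1):
        sift_down(result, root, n)
    # Repeatedly move the maximum to the end and shrink the heap.
    for end in range(n - 1, 0, -1):
        result[0], result[end] = result[end], result[0]
        sift_down(result, 0, end)
    return result
```

Each of the n extractions calls `sift_down` on a tree of height at most log₂ n, which gives the O(n log n) bound.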

Radix Sort: When Comparisons Aren’t Needed

Radix Sort is a non-comparative integer sorting algorithm that sorts data with integer keys by grouping the keys by their individual digits, one digit position at a time. It is particularly useful when the keys have a small, bounded number of digits. Radix Sort’s efficiency comes from its ability to avoid comparisons, instead relying on the distribution of elements into buckets based on their digits. The time complexity of Radix Sort is O(nk), where n is the number of elements and k is the number of digits in the longest key.
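One common variant processes digits from least significant to most significant (LSD radix sort). The sketch below assumes non-negative integers and base 10 by default; the `base` parameter and the rejection of negative keys are choices made for this example.

```python
def radix_sort(values: list[int], base: int = 10) -> list[int]:
    """Return a sorted copy of non-negative integers using LSD Radix Sort."""
    result = list(values)
    if not result:
        return result
    if min(result) < 0:
        # Assumption: negative keys are out of scope for this sketch.
        raise ValueError("radix_sort() supports non-negative integers only")
    largest = max(result)
    place = 1                                   # 1s, then 10s, then 100s, ...
    while place <= largest:
        buckets = [[] for _ in range(base)]
        for value in result:
            buckets[(value // place) % base].append(value)
        # Concatenating buckets in order keeps earlier digit passes intact.
        result = [value for bucket in buckets for value in bucket]
        place *= base
    return result
```

The loop runs once per digit of the largest key and touches every element each time, which is the O(nk) cost in code form.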

Counting Sort: Linear Time Sorting

Counting Sort is another non-comparative sorting algorithm that operates by counting the number of objects having distinct key values. It is efficient when the range of input data is not significantly greater than the number of values to sort. Counting Sort has a time complexity of O(n + k), where n is the number of elements and k is the range of input. This makes it particularly useful for sorting integers within a known range.
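A minimal sketch for integers in the range 0 to `max_value` follows. The parameter name `max_value` and the range check are assumptions made for this example; this simple form rebuilds the output from the counts, which is enough for plain integers but does not carry along attached records.

```python
def counting_sort(values: list[int], max_value: int) -> list[int]:
    """Return a sorted copy of integers known to lie in 0..max_value."""
    counts = [0] * (max_value + 1)
    for value in values:                        # one pass over n elements
        if not 0 <= value <= max_value:
            raise ValueError(f"{value} is outside 0..{max_value}")
        counts[value] += 1
    result = []
    for key, count in enumerate(counts):        # one pass over k + 1 counters
        result.extend([key] * count)
    return result
```

The two passes, one over the input and one over the counters, account for the n and k terms in O(n + k).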

Mathematical Proofs for Non-Comparison Sorts

The efficiency of non-comparison sorts like Radix Sort and Counting Sort can be attributed to their ability to exploit the properties of the input data. For instance, Counting Sort maps each input value directly to a position in a count array, so it makes one pass over the n inputs and one pass over the k possible key values, for a total of O(n + k) operations. This is linear in n whenever k grows no faster than n.

Understanding the mathematical foundations of these advanced sorting techniques is crucial for appreciating their efficiency and applicability in various computational contexts.

The Mathematics of Algorithm Optimization

The art of algorithm optimization is underpinned by mathematical rigor, ensuring algorithms perform at their best. Optimizing algorithms involves a deep understanding of their mathematical foundations, which is crucial for developing efficient computational methods.

Average Case vs. Worst Case Analysis

When analyzing algorithms, it’s essential to distinguish between average-case and worst-case scenarios. Average-case analysis provides insight into an algorithm’s typical performance, while worst-case analysis offers a guarantee on its maximum running time.

- Average-case analysis helps in understanding the expected behavior of an algorithm.

- Worst-case analysis is critical for applications where predictability is key.

Amortized Analysis Techniques

Amortized analysis is a method used to analyze the performance of algorithms over a sequence of operations. It’s particularly useful for data structures like dynamic arrays or stacks.

- Identify the operations to be analyzed.

- Calculate the total time for a sequence of operations.

- Divide the total time by the number of operations to get the amortized cost.
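These steps can be illustrated with a dynamic array that doubles its capacity when full. The sketch below counts only the element copies caused by resizing; the doubling policy, the starting capacity of 1, and the function name `total_copy_cost` are assumptions made for this example.

```python
def total_copy_cost(num_appends: int) -> int:
    """Count element copies made by a doubling dynamic array over num_appends appends."""
    capacity, size, copies = 1, 0, 0
    for _ in range(num_appends):
        if size == capacity:
            copies += size        # move every existing element to a bigger buffer
            capacity *= 2
        size += 1                 # the append itself
    return copies


# Total copies stay below 2n, so the average cost per append is bounded by a constant.
for n in (1, 8, 9, 1000):
    assert total_copy_cost(n) < 2 * n
```

A single append can cost O(n) when it triggers a resize, but dividing the total work by the number of appends gives an amortized cost of O(1) per operation.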

Mathematical Proofs for Algorithm Correctness

Mathematical proofs are essential for verifying the correctness of algorithms. Techniques such as induction and contradiction are commonly used.

Example: Proving the correctness of a sorting algorithm involves showing that it correctly sorts any input array.

The Master Theorem and Its Applications

The Master Theorem is a powerful tool for solving recurrence relations that arise in the analysis of divide-and-conquer algorithms.

It provides a straightforward way to determine the time complexity of many algorithms, making it an indispensable tool in algorithm design.
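For reference, a commonly stated form of the theorem is sketched below. It applies to recurrences of the form T(n) = aT(n/b) + f(n) with constants a ≥ 1 and b > 1; the symbols ε and k are the usual auxiliary constants and are introduced here only for the statement.

$$
T(n) = a\,T(n/b) + f(n)
\quad\Longrightarrow\quad
T(n) =
\begin{cases}
\Theta\!\left(n^{\log_b a}\right) & \text{if } f(n) = O\!\left(n^{\log_b a - \varepsilon}\right) \text{ for some } \varepsilon > 0, \\[4pt]
\Theta\!\left(n^{\log_b a}\log n\right) & \text{if } f(n) = \Theta\!\left(n^{\log_b a}\right), \\[4pt]
\Theta\!\left(f(n)\right) & \text{if } f(n) = \Omega\!\left(n^{\log_b a + \varepsilon}\right) \text{ and } a\,f(n/b) \le k\,f(n) \text{ for some } k < 1.
\end{cases}
$$

Binary search (a = 1, b = 2, f(n) = Θ(1)) and merge sort (a = 2, b = 2, f(n) = Θ(n)) both fall into the second case, giving Θ(log n) and Θ(n log n) respectively.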

Visualizing Algorithms: Understanding Through Representation

Through visualization, the abstract concepts of algorithms, such as binary search algorithms, become more concrete and easier to analyze. Visual representation aids in understanding the step-by-step execution of algorithms, making it simpler to grasp their complexity and efficiency.

Mathematical Models for Algorithm Visualization

Mathematical models form the backbone of algorithm visualization. These models help in creating graphical representations that illustrate how algorithms operate on different data structures. By leveraging mathematical principles, developers can create interactive visualizations that demonstrate the dynamics of algorithms like sorting and searching.

Decision Trees and Algorithm Behavior

Decision trees are a powerful tool for visualizing the behavior of algorithms, especially those involving comparisons like sorting algorithms. They illustrate the sequence of decisions made during the execution of an algorithm, providing insights into its operational complexity.
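One well-known consequence of the decision-tree view is a lower bound for comparison-based sorting, sketched here. A binary decision tree of height h has at most 2^h leaves, and a correct sort of n distinct elements needs at least one leaf for each of the n! possible orderings:

$$
2^h \ge n!
\quad\Longrightarrow\quad
h \ge \log_2(n!) \ge \log_2\!\left(\left(\tfrac{n}{2}\right)^{n/2}\right) = \tfrac{n}{2}\log_2\tfrac{n}{2} = \Omega(n \log n)
$$

Since h is the number of comparisons on the longest root-to-leaf path, any comparison sort needs Ω(n log n) comparisons in the worst case.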

Using Visualization to Understand Complexity

Visualization plays a crucial role in understanding the complexity of algorithms. By representing algorithms graphically, developers can better analyze their time and space complexity, identifying potential bottlenecks and areas for optimization.

| Algorithm | Time Complexity | Space Complexity |
|---|---|---|
| Binary Search | O(log n) | O(1) |
| Merge Sort | O(n log n) | O(n) |
| Quick Sort | O(n log n) average, O(n^2) worst case | O(log n) average |

By utilizing visualization techniques, developers can gain a deeper understanding of how algorithms operate on data structures and improve their ability to analyze and optimize algorithmic performance.

Real-World Applications of Binary Search and Sorting

The principles behind binary search and sorting algorithms are applied in diverse fields, transforming how data is managed. These algorithms are not just theoretical constructs; they have a significant impact on various technological domains.

Database Systems and Indexing

In database systems, binary search is used to quickly locate data. Indexing in databases often relies on sorting algorithms to maintain data in a structured order, enabling faster query responses. This is crucial for applications that require rapid data retrieval.
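As a toy illustration of lookup in a sorted index, Python's standard `bisect` module performs the binary search step. The function name `index_lookup` and the flat sorted list are assumptions made for this example; production databases typically use more elaborate structures such as B-trees.

```python
import bisect


def index_lookup(sorted_keys: list, key) -> int:
    """Return the position of key in sorted_keys, or -1 if it is absent."""
    pos = bisect.bisect_left(sorted_keys, key)   # leftmost insertion point
    if pos < len(sorted_keys) and sorted_keys[pos] == key:
        return pos
    return -1
```

For example, `index_lookup([101, 205, 309, 412], 309)` returns `2`, and a key that is not in the list returns `-1`.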

Search Engines and Information Retrieval

Search engines utilize sorting algorithms to rank search results. The efficiency of these algorithms directly affects the speed and relevance of search results. Binary search is also employed in information retrieval systems to quickly find relevant data.

Machine Learning Algorithm Optimization

In machine learning, sorting and searching algorithms are fundamental. They are used in data preprocessing, feature selection, and in the algorithms themselves, such as in decision trees. Optimizing these algorithms is crucial for the performance of machine learning models.

Practical Implementation Considerations

When implementing binary search and sorting algorithms, several factors must be considered:

- Data size and complexity

- Hardware and software constraints

- The need for stability in sorting algorithms
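Stability means that elements with equal keys keep their original relative order. Python's built-in `sorted` is guaranteed to be stable, which the following sketch uses to show the effect; the sample records and the function name `sort_by_age` are made up for illustration.

```python
def sort_by_age(records: list[tuple[str, int]]) -> list[tuple[str, int]]:
    """Sort (name, age) records by age only; ties keep their input order."""
    return sorted(records, key=lambda record: record[1])


people = [("alice", 30), ("bob", 25), ("carol", 30), ("dave", 25)]
print(sort_by_age(people))
# [('bob', 25), ('dave', 25), ('alice', 30), ('carol', 30)]
```

Within each age group the names appear in the same order as in the input, which is what makes it safe to sort by one field and then by another.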

Understanding these factors is key to effectively applying binary search and sorting algorithms in real-world applications.

Conclusion: The Elegant Mathematics Behind Computational Efficiency

The intricate dance between mathematics and computer science is beautifully exemplified in binary search and sorting algorithms. Understanding the mathematical principles that drive these algorithms is crucial for anyone working in the field of computer science.

The efficiency of algorithms such as binary search, merge sort, and quick sort is rooted in mathematical concepts like logarithmic growth and recurrence relations. By grasping these concepts, developers can optimize their code for better performance, leveraging the power of mathematics in computer science to achieve computational efficiency.

Calculating algorithm efficiency is a critical skill that allows developers to compare different algorithms and choose the most suitable one for a given task. Whether the task calls for sorting or for the intricacies of binary search, a strong foundation in mathematical principles is essential.

As computer science continues to evolve, the importance of understanding the mathematical underpinnings of algorithms will only continue to grow. By embracing this knowledge, developers and researchers can unlock new levels of efficiency and innovation in their work.