Imagine being able to predict user behavior, classify complex data, or make informed decisions with the help of machine learning. This rapidly evolving field is transforming industries, from healthcare to finance, by leveraging advanced mathematical concepts. At its core, machine learning relies heavily on linear algebra and statistics to develop and refine its algorithms.

The significance of understanding the algorithms behind machine learning cannot be overstated. As we continue to generate vast amounts of data, the need for professionals who can interpret and apply this data using machine learning techniques is growing. By grasping the fundamentals of linear algebra and statistics, individuals can unlock the full potential of machine learning and drive innovation.

Key Takeaways

- Machine learning relies on linear algebra and statistics to develop its algorithms.

- Understanding the algorithms behind machine learning is crucial for its effective application.

- Linear algebra and statistics are fundamental to making informed decisions in various industries.

- The demand for professionals with expertise in machine learning is on the rise.

- Grasping machine learning fundamentals can drive innovation and growth.

The Mathematical Foundation of Machine Learning

Understanding the mathematical underpinnings of machine learning is crucial for developing robust AI systems. The significance of mathematics in AI development cannot be overstated, as it provides the theoretical foundations upon which machine learning algorithms are built.

Why Mathematics Matters in AI Development

Mathematics is essential in AI development because it enables the creation of algorithms that can learn from data, identify patterns, and make predictions. Linear algebra and statistics are particularly important, as they provide the tools necessary for data analysis and model development.

The Two Pillars: Linear Algebra and Statistics

Linear algebra and statistics are the two mathematical disciplines that underpin machine learning. Linear algebra provides the framework for manipulating vectors and matrices, which is crucial for many machine learning algorithms. Statistics, on the other hand, offers the methods necessary for understanding data distributions and making inferences.

How These Fields Complement Each Other

Linear algebra and statistics complement each other by providing a comprehensive toolkit for machine learning. For instance, linear regression, a fundamental algorithm in machine learning, relies on both linear algebra for its mathematical formulation and statistics for understanding the underlying data distribution.

By combining linear algebra and statistics, machine learning practitioners can develop sophisticated models that are capable of complex data analysis and prediction tasks.

The Algorithms Behind Machine Learning: How Linear Algebra and Statistics Play a Crucial Role

Linear algebra and statistics are not just theoretical concepts; they are the driving forces behind the algorithms that power machine learning. The intersection of mathematics and computer science has given rise to innovative AI systems that can learn, reason, and interact with humans.

The Intersection of Mathematics and Computer Science

The field of machine learning is deeply rooted in mathematical disciplines. Linear algebra provides the framework for representing and manipulating data, while statistics offers the tools for making inferences and predictions from that data. This synergy enables the development of sophisticated algorithms that can analyze complex patterns and make informed decisions.

From Mathematical Theory to Practical Applications

The translation of mathematical theories into practical machine learning algorithms is a nuanced process. It involves optimizing mathematical models for computational efficiency and ensuring that they can be trained on large datasets. Optimization algorithms such as gradient descent are crucial in this regard.

Real-world Examples of Math-Driven AI

Several real-world applications demonstrate the power of math-driven AI. For instance, image recognition systems rely heavily on linear algebra to process and understand visual data. Similarly, predictive analytics in finance and healthcare utilize statistical models to forecast outcomes and make informed decisions.

- Image and speech recognition

- Predictive analytics in finance and healthcare

- Natural Language Processing (NLP)

These examples underscore the importance of understanding the mathematical foundations of machine learning. By leveraging linear algebra and statistics, developers can create more accurate, efficient, and robust AI systems.

Essential Linear Algebra Concepts for Machine Learning

Understanding linear algebra is crucial for machine learning, as it provides the tools needed to manipulate and analyze data effectively. Linear algebra enables the representation of complex data structures in a simplified manner, facilitating the development of algorithms that can learn from this data.

Vectors and Vector Spaces

Vectors are fundamental objects in linear algebra. Geometrically, a vector has both magnitude and direction; in machine learning, a vector is usually an ordered list of numbers that represents the features of one data point. For instance, in image classification, the pixel intensities of an image can be flattened into a single vector with one entry per pixel (or per color channel). A vector space is a collection of vectors that is closed under addition and scalar multiplication, which provides the framework for manipulating these representations.
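As a minimal sketch of these vector operations, the snippet below uses NumPy with two made-up feature vectors; the names `u` and `v` and their values are illustrative assumptions, not data from any real model.

```python
import numpy as np

# Two hypothetical feature vectors (values are illustrative only).
u = np.array([1.0, 2.0, 2.0])
v = np.array([0.0, 3.0, 4.0])

total = u + v                  # vector addition
scaled = 2.0 * u               # scalar multiplication
length_u = np.linalg.norm(u)   # Euclidean length: sqrt(1 + 4 + 4) = 3
dot_uv = u @ v                 # dot product: 0 + 6 + 8 = 14

# Cosine similarity compares direction while ignoring magnitude.
cosine = dot_uv / (length_u * np.linalg.norm(v))
```

Addition and scaling are exactly the two operations a vector space must support; the norm and dot product add the notions of length and angle that many algorithms rely on.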

Matrices and Matrix Operations

Matrices are another critical concept, used to represent systems of equations and transformations. In machine learning, matrices are used extensively for data representation and manipulation. Operations such as matrix multiplication are essential for algorithms like neural networks. Matrix factorization techniques, such as Singular Value Decomposition (SVD), are used for dimensionality reduction and feature extraction.
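To make the idea of a matrix as both a data container and a transformation concrete, here is a small NumPy sketch. The matrices `X` and `A` are invented for illustration.

```python
import numpy as np

# Data matrix: 3 samples (rows) by 2 features (columns); values are made up.
X = np.array([[1.0, 2.0],
              [3.0, 4.0],
              [5.0, 6.0]])

# A 2x2 matrix acting as a linear transformation of the feature space.
A = np.array([[2.0, 0.0],
              [0.0, 0.5]])

X_transformed = X @ A.T   # applies A to every row vector of X at once
gram = X.T @ X            # 2x2 matrix of inner products between features
```

A single matrix product transforms all samples together, which is why matrix multiplication sits at the core of neural network layers.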

Eigenvalues and Eigenvectors

An eigenvector of a square matrix is a nonzero vector that the corresponding linear transformation maps to a scalar multiple of itself; that scalar is the eigenvalue. In machine learning, they are used in Principal Component Analysis (PCA) for dimensionality reduction. The eigenvectors of the data’s covariance matrix give the directions of the new features (the principal components), while the eigenvalues give the variance captured along each direction, and therefore its importance.
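The defining relation can be checked numerically. The sketch below assumes a small symmetric matrix `C` (standing in for a covariance matrix) and uses `np.linalg.eigh`, which is intended for symmetric input.

```python
import numpy as np

# A small symmetric matrix, e.g. a covariance matrix (values are illustrative).
C = np.array([[2.0, 1.0],
              [1.0, 2.0]])

# eigh returns eigenvalues in ascending order for symmetric matrices.
eigenvalues, eigenvectors = np.linalg.eigh(C)

# Each column v of `eigenvectors` satisfies C @ v = lambda * v.
v_top = eigenvectors[:, -1]    # direction with the largest eigenvalue
lambda_top = eigenvalues[-1]
```

For this matrix the eigenvalues are 1 and 3, so the direction `v_top` carries three times as much "stretch" as the other one.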

Singular Value Decomposition (SVD)

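SVD is a factorization technique that decomposes a matrix \(A\) into the product of three matrices, \(A = U \Sigma V^{\top}\), where \(U\) and \(V\) have orthonormal columns and \(\Sigma\) is a diagonal matrix of non-negative singular values. The factorization reveals the underlying structure of the data, and it’s widely used in recommendation systems and image compression.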

“SVD is a powerful tool for understanding the underlying structure of data, enabling efficient data compression and feature extraction.”

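By applying SVD and keeping only the largest singular values, machine learning algorithms can work with a low-rank approximation of the data, which often reduces noise and can improve performance.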
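A rough illustration of low-rank approximation with SVD follows. The "ratings" matrix is synthetic (a rank-2 matrix plus small noise), and the choice `k = 2` is an assumption made for this example.

```python
import numpy as np

rng = np.random.default_rng(0)

# Hypothetical 6x5 matrix that is rank 2 plus a little noise.
clean = rng.normal(size=(6, 2)) @ rng.normal(size=(2, 5))
noisy = clean + 0.01 * rng.normal(size=clean.shape)

# Thin SVD: noisy = U @ diag(s) @ Vt, singular values sorted descending.
U, s, Vt = np.linalg.svd(noisy, full_matrices=False)

# Keep only the k largest singular values for a rank-k approximation.
k = 2
approx = U[:, :k] @ np.diag(s[:k]) @ Vt[:k, :]

# The Frobenius-norm error equals the energy in the discarded singular values.
error = np.linalg.norm(noisy - approx)
```

Storing `U[:, :k]`, `s[:k]`, and `Vt[:k, :]` takes fewer numbers than the full matrix, which is the basis of SVD-style compression.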

In conclusion, linear algebra provides the foundational tools necessary for machine learning. Understanding vectors, matrices, eigenvalues, eigenvectors, and techniques like SVD is essential for developing and implementing machine learning algorithms effectively.

Key Statistical Concepts Powering Machine Learning

Statistical concepts form the backbone of machine learning, enabling algorithms to learn from data. Understanding these concepts is crucial for developing effective models that can make accurate predictions and decisions.

Probability Distributions and Random Variables

Probability distributions and random variables are fundamental to statistical analysis in machine learning. A probability distribution describes the probability of different values or ranges of values that a random variable can take. Common distributions include the normal distribution, binomial distribution, and Poisson distribution.

Understanding probability distributions is essential for modeling real-world phenomena and making predictions. For instance, the normal distribution is widely used in machine learning to model continuous data.
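The three distributions named above can be sketched with the Python standard library alone. The parameters below (mean 170, standard deviation 10, and so on) are hypothetical, and the two helper functions are written out here for illustration rather than taken from a library.

```python
import math
from statistics import NormalDist

# Normal distribution with hypothetical parameters.
heights = NormalDist(mu=170.0, sigma=10.0)
p_within_1sd = heights.cdf(180.0) - heights.cdf(160.0)  # about 0.68

def binomial_pmf(k, n, p):
    """P(exactly k successes in n independent trials with success prob p)."""
    return math.comb(n, k) * p**k * (1 - p) ** (n - k)

def poisson_pmf(k, lam):
    """P(exactly k events when events occur at average rate lam)."""
    return lam**k * math.exp(-lam) / math.factorial(k)

p_two_heads = binomial_pmf(2, 4, 0.5)   # 6 / 16
p_no_events = poisson_pmf(0, 3.0)       # exp(-3)
```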

Statistical Inference and Hypothesis Testing

Statistical inference involves making conclusions about a population based on a sample of data. Hypothesis testing is a critical aspect of statistical inference, allowing us to test hypotheses about the underlying data distribution.

Hypothesis testing involves formulating a null and alternative hypothesis, calculating a test statistic, and determining the p-value to decide whether to reject the null hypothesis.
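One way to see these steps in code is a permutation test, used here as a simple stand-in for classical tests such as the t-test. The null hypothesis is that both groups come from the same distribution; the two samples and the 0.05 significance level are assumptions chosen for the example.

```python
import numpy as np

def permutation_test(a, b, n_permutations=10_000, seed=0):
    """Two-sided permutation test for a difference in means."""
    rng = np.random.default_rng(seed)
    a = np.asarray(a, dtype=float)
    b = np.asarray(b, dtype=float)
    observed = abs(a.mean() - b.mean())          # test statistic
    pooled = np.concatenate([a, b])
    count = 0
    for _ in range(n_permutations):
        shuffled = rng.permutation(pooled)       # relabel under the null
        diff = abs(shuffled[:a.size].mean() - shuffled[a.size:].mean())
        if diff >= observed - 1e-12:             # tolerance for rounding
            count += 1
    # The +1 terms keep the estimated p-value strictly above zero.
    return observed, (count + 1) / (n_permutations + 1)

# Hypothetical measurements from two groups.
group_a = [5.1, 4.9, 5.6, 5.8, 6.0, 5.5]
group_b = [7.9, 8.2, 7.5, 8.8, 8.1, 7.7]

statistic, p_value = permutation_test(group_a, group_b)
reject_null = p_value < 0.05
```

A small p-value means that a difference at least as large as the observed one rarely arises when group labels are assigned at random.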

Bayesian Statistics in Machine Learning

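Bayesian statistics provides a framework for updating probabilities based on new data: Bayes’ theorem combines a prior belief about a parameter with the likelihood of the observed data to produce a posterior distribution. Bayesian methods are particularly useful in machine learning for tasks such as parameter estimation and model selection.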
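A compact example of Bayesian updating is the Beta-Binomial model, where a Beta prior on a success probability is updated by simply adding observed counts. The uniform prior and the counts (7 successes, 3 failures) are assumptions for illustration.

```python
def update_beta(alpha, beta, successes, failures):
    """Posterior Beta parameters after observing binomial data."""
    return alpha + successes, beta + failures

def beta_mean(alpha, beta):
    """Mean of a Beta(alpha, beta) distribution."""
    return alpha / (alpha + beta)

prior = (1.0, 1.0)                       # uniform prior over [0, 1]
posterior = update_beta(*prior, successes=7, failures=3)

prior_estimate = beta_mean(*prior)            # 0.5 before seeing data
posterior_estimate = beta_mean(*posterior)    # 8 / 12 after seeing data
```

Updating in two batches gives the same posterior as updating once with all the data, which is what makes this style of inference convenient for streaming data.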

Maximum Likelihood Estimation

Maximum Likelihood Estimation (MLE) is a technique used to estimate the parameters of a statistical model. MLE involves finding the values of the model parameters that maximize the likelihood of observing the data.
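For a normal distribution, the maximum likelihood estimates have a closed form: the sample mean and the (biased) sample variance. The sketch below checks this on synthetic data; the true parameters (mean 5, standard deviation 2) and the helper name `gaussian_log_likelihood` are assumptions made for the example.

```python
import numpy as np

def gaussian_log_likelihood(data, mu, sigma2):
    """Log-likelihood of i.i.d. normal data with mean mu and variance sigma2."""
    n = data.size
    return (-0.5 * n * np.log(2.0 * np.pi * sigma2)
            - np.sum((data - mu) ** 2) / (2.0 * sigma2))

rng = np.random.default_rng(42)
data = rng.normal(loc=5.0, scale=2.0, size=1_000)   # synthetic sample

mu_hat = data.mean()       # MLE of the mean
sigma2_hat = data.var()    # MLE of the variance (divides by n, not n - 1)

best = gaussian_log_likelihood(data, mu_hat, sigma2_hat)
worse = gaussian_log_likelihood(data, mu_hat + 0.1, sigma2_hat)
```

Any other parameter values, such as the shifted mean used for `worse`, give a lower log-likelihood than the MLE.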

The following table summarizes key aspects of MLE:

| Concept | Description | Application in Machine Learning |
|---|---|---|
| Likelihood Function | A function that describes the probability of observing the data given the model parameters. | Used in parameter estimation. |
| Parameter Estimation | The process of finding the model parameters that maximize the likelihood function. | Critical in model training. |
| Optimization Techniques | Methods used to maximize the likelihood function, such as gradient descent applied to the negative log-likelihood. | Essential for efficient model training. |

By understanding and applying these statistical concepts, machine learning practitioners can develop more accurate and robust models.

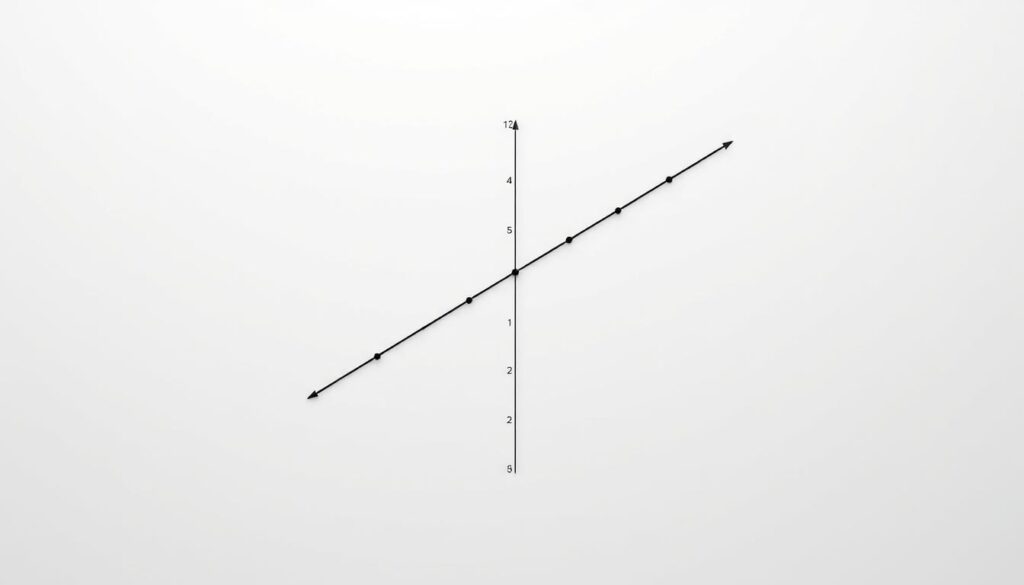

Linear Regression and Classification Algorithms

Machine learning algorithms, particularly linear regression and classification, are deeply rooted in mathematical principles, making a grasp of these concepts essential. Linear regression is used for predicting continuous outcomes, while classification algorithms are used for categorical outcomes.

The Mathematics Behind Linear Regression

Linear regression involves modeling the relationship between a dependent variable and one or more independent variables using a linear equation. The coefficients of this equation are determined by minimizing the sum of the squared errors between observed responses and predicted responses, a procedure known as ordinary least squares. Linear algebra plays a crucial role here: writing the data as a matrix \(X\) and the responses as a vector \(y\), the least-squares coefficients \(\beta\) satisfy the normal equations \(X^{\top} X \beta = X^{\top} y\), which can be solved efficiently with standard matrix methods.
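The least-squares fit can be sketched directly in NumPy. The data below is synthetic, generated from an assumed true slope of 2 and intercept of 1, and the fit is computed two ways to show they agree.

```python
import numpy as np

rng = np.random.default_rng(0)
n_samples = 200

# Synthetic data from a hypothetical line y = 2x + 1 plus small noise.
x = rng.uniform(-3.0, 3.0, size=n_samples)
y = 2.0 * x + 1.0 + rng.normal(scale=0.1, size=n_samples)

# Design matrix with a column of ones for the intercept term.
X = np.column_stack([np.ones(n_samples), x])

# Least-squares solution (minimizes the sum of squared errors).
beta, _, _, _ = np.linalg.lstsq(X, y, rcond=None)
intercept, slope = beta

# Same coefficients from the normal equations X^T X beta = X^T y.
beta_normal = np.linalg.solve(X.T @ X, X.T @ y)
```

In practice `lstsq` is usually preferred over forming `X.T @ X` explicitly, because it is more stable when features are nearly collinear.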

Logistic Regression: From Linear Algebra to Classification

Logistic regression is a classification algorithm that uses the logistic (sigmoid) function to predict probabilities. It is based on the principles of linear regression but is adapted for binary classification problems. The model computes a linear combination of the input features, \(z = w^{\top} x + b\), maps it to a probability with \(\sigma(z) = 1 / (1 + e^{-z})\), and then applies a threshold (commonly 0.5) to classify the outcome.
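The prediction step of logistic regression is short enough to write out. In this sketch the weights `w` and bias `b` are hypothetical values picked by hand, not the result of training.

```python
import numpy as np

def sigmoid(z):
    """Logistic function: maps any real number to the interval (0, 1)."""
    return 1.0 / (1.0 + np.exp(-z))

def predict_proba(X, w, b):
    """Probability of the positive class for each row of X."""
    return sigmoid(X @ w + b)

def predict(X, w, b, threshold=0.5):
    """Class labels obtained by thresholding the probabilities."""
    return (predict_proba(X, w, b) >= threshold).astype(int)

# Hypothetical, hand-picked parameters (not learned from data).
w = np.array([1.5, -0.5])
b = -1.0

X = np.array([[2.0, 1.0],    # linear score  1.5
              [0.0, 2.0],    # linear score -2.0
              [1.0, 1.0]])   # linear score  0.0, probability exactly 0.5

probabilities = predict_proba(X, w, b)
labels = predict(X, w, b)
```

Training would adjust `w` and `b` to maximize the likelihood of the observed labels; only the forward computation is shown here.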

Support Vector Machines: Geometric Intuition

Support Vector Machines (SVMs) are another type of classification algorithm. An SVM aims to find the hyperplane that separates the classes in the feature space with the largest possible margin, that is, the largest distance between the hyperplane and the nearest training points of each class (the support vectors). This geometric intuition of maximizing the margin is central to how SVMs classify effectively.
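The geometry can be made concrete for a fixed hyperplane \(w^{\top} x + b = 0\). The sketch below does not train an SVM; it only evaluates distances, margin width, and hinge loss for a hand-picked hyperplane, and all values are illustrative assumptions.

```python
import numpy as np

def signed_distances(X, w, b):
    """Signed distance of each row of X from the hyperplane w.x + b = 0."""
    return (X @ w + b) / np.linalg.norm(w)

def margin_width(w):
    """Distance between the planes w.x + b = +1 and w.x + b = -1."""
    return 2.0 / np.linalg.norm(w)

def hinge_loss(X, y, w, b):
    """Mean hinge loss for labels y in {-1, +1}."""
    return np.maximum(0.0, 1.0 - y * (X @ w + b)).mean()

# Hypothetical hyperplane 3*x1 + 4*x2 - 5 = 0 and two labeled points.
w = np.array([3.0, 4.0])
b = -5.0
X = np.array([[3.0, 4.0],
              [0.0, 0.0]])
y = np.array([1, -1])

distances = signed_distances(X, w, b)
width = margin_width(w)
loss = hinge_loss(X, y, w, b)
```

Because the margin width is \(2 / \lVert w \rVert\), maximizing the margin is equivalent to minimizing \(\lVert w \rVert\) subject to the points being classified correctly.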

Implementing These Algorithms in Practice

In practice, implementing linear regression, logistic regression, and SVMs involves using libraries and frameworks that provide optimized implementations of these algorithms. For instance, libraries like Scikit-learn in Python offer efficient and easy-to-use implementations.

| Algorithm | Primary Use | Key Mathematical Concept |
|---|---|---|
| Linear Regression | Continuous Outcome Prediction | Minimizing Squared Errors |
| Logistic Regression | Binary Classification | Logistic Function |
| Support Vector Machines | Classification | Maximizing Margin |

Understanding the mathematical foundations of these algorithms is crucial for their effective application in machine learning tasks. By leveraging linear algebra and statistical concepts, practitioners can develop robust models that accurately predict outcomes.

Dimensionality Reduction and Feature Extraction

In the realm of machine learning, dimensionality reduction plays a pivotal role in enhancing model performance. By simplifying complex datasets, dimensionality reduction techniques enable faster processing, reduced noise, and improved model interpretability.

Principal Component Analysis (PCA)

PCA is a widely used technique for dimensionality reduction that transforms high-dimensional data into a lower-dimensional space by selecting the principal components that capture the most variance.

Key benefits of PCA include:

- Reducing noise and irrelevant features

- Improving data visualization

- Enhancing model performance
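PCA can be sketched in a few lines as an eigendecomposition of the covariance matrix. The function name `pca` and the synthetic data (points scattered mostly along one direction in 3-D) are assumptions for this example; library implementations typically use SVD instead.

```python
import numpy as np

def pca(X, n_components):
    """Project X onto its top principal components via the covariance matrix."""
    X_centered = X - X.mean(axis=0)
    cov = np.cov(X_centered, rowvar=False)
    eigenvalues, eigenvectors = np.linalg.eigh(cov)   # ascending order
    order = np.argsort(eigenvalues)[::-1]             # largest variance first
    components = eigenvectors[:, order[:n_components]]
    explained = eigenvalues[order[:n_components]] / eigenvalues.sum()
    return X_centered @ components, components, explained

rng = np.random.default_rng(0)

# Synthetic 3-D data lying close to a single direction.
direction = np.array([[2.0, 1.0, 0.5]])
t = rng.normal(size=(300, 1))
X = t @ direction + 0.05 * rng.normal(size=(300, 3))

projected, components, explained = pca(X, n_components=1)
```

Here one component explains nearly all of the variance, so the 3-D data can be summarized by a single coordinate with little loss.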

Linear Discriminant Analysis (LDA)

LDA is another dimensionality reduction technique that focuses on finding linear combinations of features that best separate classes of data. Unlike PCA, LDA is a supervised method that considers class labels.

LDA is particularly useful for:

- Classification problems

- Data with multiple classes
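For the two-class case, LDA reduces to Fisher's linear discriminant, which can be sketched as follows. The function name `fisher_lda_direction` and the two synthetic Gaussian clusters are assumptions; the multi-class version requires a generalized eigenproblem not shown here.

```python
import numpy as np

def fisher_lda_direction(X, y):
    """Unit vector that best separates two classes (labels 0 and 1)."""
    X0, X1 = X[y == 0], X[y == 1]
    m0, m1 = X0.mean(axis=0), X1.mean(axis=0)
    # Within-class scatter: spread of each class around its own mean.
    Sw = (X0 - m0).T @ (X0 - m0) + (X1 - m1).T @ (X1 - m1)
    w = np.linalg.solve(Sw, m1 - m0)
    return w / np.linalg.norm(w)

rng = np.random.default_rng(1)

# Two synthetic clusters separated along the first feature.
X0 = rng.normal(loc=[0.0, 0.0], scale=0.5, size=(100, 2))
X1 = rng.normal(loc=[3.0, 0.0], scale=0.5, size=(100, 2))
X = np.vstack([X0, X1])
y = np.array([0] * 100 + [1] * 100)

w = fisher_lda_direction(X, y)
z = X @ w   # one-dimensional projection used for classification
```

Unlike PCA, the direction depends on the labels `y`: it is chosen to push the class means apart relative to the within-class spread.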

t-SNE and UMAP: Beyond Linear Methods

t-SNE (t-distributed Stochastic Neighbor Embedding) and UMAP (Uniform Manifold Approximation and Projection) are non-linear dimensionality reduction techniques. They are particularly effective for visualizing high-dimensional data in a two-dimensional or three-dimensional space.

When to Use Each Technique

The choice of dimensionality reduction technique depends on the dataset and the specific requirements of the project.

- Use PCA for exploratory data analysis and when the structure of the data is well captured by linear combinations of features.

- Opt for LDA when dealing with classification problems and class labels are available.

- Apply t-SNE or UMAP for non-linear data and when visualization is a priority.

By understanding and applying these dimensionality reduction techniques, machine learning practitioners can significantly improve the quality and performance of their models.

Neural Networks and Deep Learning Mathematics

Understanding the algorithms behind machine learning requires a deep dive into the mathematics of neural networks. Neural networks are a fundamental component of deep learning, a subset of machine learning that has revolutionized the field of artificial intelligence.

The mathematical foundations of neural networks are complex and multifaceted, involving various branches of mathematics. One of the critical mathematical disciplines underlying neural networks is linear algebra, particularly matrix operations.

Matrix Operations in Neural Networks

Matrix operations are crucial in neural networks as they enable the efficient computation of neural network layers. Matrix multiplication is used to compute the output of each layer, making it a fundamental operation in the training process of neural networks.

For instance, consider a simple neural network layer with an input vector \(x\), weights matrix \(W\), and bias vector \(b\). The output \(y\) of this layer is computed as \(y = Wx + b\), a straightforward application of matrix operations.
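The layer computation \(y = Wx + b\) translates directly into NumPy. The weights, bias, and inputs below are made-up numbers, and the batched form shows how the same layer is applied to several inputs at once.

```python
import numpy as np

# Hypothetical layer with 3 inputs and 2 outputs.
W = np.array([[1.0, 0.0, -1.0],
              [0.5, 2.0,  0.0]])
b = np.array([0.1, -0.2])

# Single input vector.
x = np.array([1.0, 2.0, 3.0])
y = W @ x + b

# Batch of inputs: each row is one sample, so the weights are transposed.
X_batch = np.array([[1.0, 2.0, 3.0],
                    [0.0, 1.0, 0.0]])
Y_batch = X_batch @ W.T + b   # bias is broadcast across rows
```

The first row of `Y_batch` matches `y`, since the batched product applies the same affine map to every sample.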

Backpropagation and Gradient Descent

Another critical aspect of neural network mathematics is backpropagation, an algorithm used to train neural networks. Backpropagation applies the chain rule to compute the gradient of the loss function with respect to every weight; gradient descent, an optimization technique, then uses those gradients to adjust the network’s weights and reduce the loss.

“Backpropagation is a method for computing the gradient of the loss function with respect to the network’s weights, enabling the efficient training of deep neural networks.”
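The interplay between computing gradients and updating weights can be sketched with a tiny one-hidden-layer network written by hand. Everything here is an illustrative assumption: the architecture (8 tanh units), the synthetic target, the learning rate, and the function names.

```python
import numpy as np

def forward(params, X):
    W1, b1, W2, b2 = params
    h = np.tanh(X @ W1 + b1)       # hidden activations
    y_hat = h @ W2 + b2            # linear output layer
    return h, y_hat

def loss_fn(params, X, y):
    _, y_hat = forward(params, X)
    return 0.5 * np.mean((y_hat - y) ** 2)

def backward(params, X, y):
    """Gradients of loss_fn with respect to each parameter (chain rule)."""
    W1, b1, W2, b2 = params
    h, y_hat = forward(params, X)
    d_yhat = (y_hat - y) / X.shape[0]     # dL/dy_hat
    dW2 = h.T @ d_yhat
    db2 = d_yhat.sum(axis=0)
    d_h = d_yhat @ W2.T                   # propagate back through W2
    d_pre = d_h * (1.0 - h ** 2)          # tanh'(z) = 1 - tanh(z)^2
    dW1 = X.T @ d_pre
    db1 = d_pre.sum(axis=0)
    return [dW1, db1, dW2, db2]

rng = np.random.default_rng(0)
X = rng.normal(size=(64, 2))
y = np.sin(X[:, :1]) + 0.5 * X[:, 1:]     # synthetic target, shape (64, 1)

params = [rng.normal(scale=0.5, size=(2, 8)), np.zeros(8),
          rng.normal(scale=0.5, size=(8, 1)), np.zeros(1)]

initial_loss = loss_fn(params, X, y)
learning_rate = 0.05
for _ in range(1000):
    grads = backward(params, X, y)                                    # backpropagation
    params = [p - learning_rate * g for p, g in zip(params, grads)]   # gradient descent
final_loss = loss_fn(params, X, y)
```

Deep learning frameworks automate the `backward` step through automatic differentiation, but the update rule in the loop is the same idea.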

Activation Functions and Their Mathematical Properties

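Activation functions introduce non-linearity into neural networks, allowing them to learn complex relationships. Common activation functions include the sigmoid, which maps inputs to the range (0, 1); tanh, which maps inputs to (-1, 1); and ReLU (Rectified Linear Unit), which outputs zero for negative inputs and the input itself otherwise. Each has its own mathematical properties, such as its output range and the shape of its derivative.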
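These functions and their derivatives are simple to write down. The sketch below defines them with NumPy; the sample input `z` is arbitrary, and the ReLU derivative at exactly zero is set to 0 by convention.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def sigmoid_grad(z):
    s = sigmoid(z)
    return s * (1.0 - s)            # peaks at 0.25 when z = 0

def relu(z):
    return np.maximum(0.0, z)

def relu_grad(z):
    return (z > 0).astype(float)    # 0 for z <= 0 (a convention at z = 0)

def tanh_grad(z):
    return 1.0 - np.tanh(z) ** 2    # peaks at 1 when z = 0

z = np.array([-2.0, 0.0, 2.0])
activations = {"sigmoid": sigmoid(z), "relu": relu(z), "tanh": np.tanh(z)}
```

The derivatives matter during training: sigmoid and tanh gradients shrink toward zero for large inputs, while the ReLU gradient stays at 1 for any positive input.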

Convolutional Networks: Specialized Linear Algebra

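Convolutional Neural Networks (CNNs) build on two specialized operations: convolution, which is a linear operation and can be expressed as a matrix multiplication, and pooling, which aggregates neighboring values (max pooling, for example, is not linear). These operations enable CNNs to efficiently process data with spatial hierarchies, like images.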

| Operation | Description | Mathematical Basis |
|---|---|---|
| Convolution | Scans input data with a filter | Linear algebra, matrix operations |
| Pooling | Downsamples data to reduce dimensions | Aggregation of values |
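Both operations in the table can be sketched with plain NumPy. This is a deliberately simple, loop-based version for a single-channel image with no padding and stride 1; the function names, the 4x4 image, and the 2x2 filter are assumptions for illustration. As in most deep learning libraries, the "convolution" here is technically cross-correlation (the filter is not flipped).

```python
import numpy as np

def conv2d_valid(image, kernel):
    """Slide the kernel over the image and sum elementwise products."""
    kh, kw = kernel.shape
    out_h = image.shape[0] - kh + 1
    out_w = image.shape[1] - kw + 1
    out = np.empty((out_h, out_w))
    for i in range(out_h):
        for j in range(out_w):
            out[i, j] = np.sum(image[i:i + kh, j:j + kw] * kernel)
    return out

def max_pool2d(x, size=2):
    """Non-overlapping max pooling over size x size windows."""
    h, w = x.shape[0] // size, x.shape[1] // size
    trimmed = x[:h * size, :w * size]
    return trimmed.reshape(h, size, w, size).max(axis=(1, 3))

image = np.arange(16, dtype=float).reshape(4, 4)   # toy 4x4 "image"
kernel = np.array([[1.0,  0.0],
                   [0.0, -1.0]])                   # diagonal difference filter

feature_map = conv2d_valid(image, kernel)   # shape (3, 3)
pooled = max_pool2d(image)                  # shape (2, 2)
```

Each output of the convolution is a dot product between the filter and an image patch, which is why the whole operation can be rewritten as one large matrix multiplication.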

The intricate mathematics underlying neural networks and deep learning models is what enables their powerful capabilities. By understanding these mathematical structures, developers can design more efficient and effective AI systems.

Practical Implementation and Tools

To bring machine learning models to life, developers rely on a suite of powerful tools and frameworks. The implementation of machine learning algorithms is deeply rooted in linear algebra and statistics, and having the right libraries can significantly streamline the development process.

NumPy and SciPy for Mathematical Operations

NumPy is the foundation for most scientific computing in Python, providing support for large, multi-dimensional arrays and matrices, along with a large collection of high-level mathematical functions to operate on these arrays. SciPy builds on NumPy, offering functions for scientific and engineering applications, including signal processing, linear algebra, and statistics.

Statistical Libraries: Statsmodels and Scikit-learn

Statsmodels is a Python module that provides classes and functions for estimating many different statistical models, conducting statistical tests, and exploring statistical data. Scikit-learn is a machine learning library that provides a wide range of algorithms for classification, regression, clustering, and more, along with tools for model selection, data preprocessing, and feature selection.

Deep Learning Frameworks: TensorFlow and PyTorch

TensorFlow is an open-source software library for numerical computation, particularly well-suited and fine-tuned for large-scale Machine Learning (ML) and Deep Learning (DL) tasks. PyTorch is another popular open-source ML library that provides a dynamic computation graph and is particularly known for its simplicity and flexibility.

Optimizing Mathematical Computations

Optimizing mathematical computations is crucial for the efficient implementation of machine learning algorithms. This involves leveraging the capabilities of libraries like NumPy and SciPy, as well as understanding how to use deep learning frameworks like TensorFlow and PyTorch effectively. By combining these tools with a solid understanding of linear algebra and statistics, developers can create highly efficient and scalable machine learning models.
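One common optimization is replacing Python loops with vectorized array operations. The sketch below computes pairwise squared distances two ways; the function names and the random inputs are assumptions for this example, and actual speedups depend on array sizes and hardware.

```python
import numpy as np

def pairwise_sq_dists_loop(A, B):
    """Squared Euclidean distances computed one pair at a time."""
    out = np.empty((A.shape[0], B.shape[0]))
    for i, a in enumerate(A):
        for j, b in enumerate(B):
            out[i, j] = np.sum((a - b) ** 2)
    return out

def pairwise_sq_dists_vectorized(A, B):
    """Same result using ||a - b||^2 = ||a||^2 - 2 a.b + ||b||^2."""
    a_sq = np.sum(A ** 2, axis=1)[:, None]   # column of squared norms
    b_sq = np.sum(B ** 2, axis=1)[None, :]   # row of squared norms
    return a_sq - 2.0 * (A @ B.T) + b_sq     # one matrix product, no loops

rng = np.random.default_rng(0)
A = rng.normal(size=(50, 8))
B = rng.normal(size=(40, 8))

same = np.allclose(pairwise_sq_dists_loop(A, B),
                   pairwise_sq_dists_vectorized(A, B))
```

The vectorized version hands the heavy lifting to a single matrix multiplication, which NumPy delegates to optimized linear algebra routines.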

Conclusion

Understanding the math behind machine learning is crucial for developing effective algorithms. Linear algebra and statistics form the foundation of machine learning, enabling practitioners to build robust models that drive business value.

By grasping key concepts such as vector spaces, eigenvalues, and probability distributions, professionals can unlock the full potential of machine learning. Tools like NumPy, SciPy, and deep learning frameworks such as TensorFlow and PyTorch make it easier to implement these mathematical concepts in practice.

As machine learning continues to evolve, understanding the underlying math will remain essential for innovation and problem-solving. By focusing on these mathematical fundamentals, practitioners can stay ahead of the curve and drive meaningful impact in their organizations.